I explained the gains of optimizing the PDCCH (the control part) of the LTE subframe in my previous article. Let’s now look at the data part (PDSCH) and the various ways to improve its efficiency. Spectral efficiency is simply the number of bits transmitted per unit of time per unit of frequency bandwidth, and it is measured in bits/s/Hz. It is proportional to throughput, since throughput is also the number of bits per unit of time transmitted in a certain bandwidth. From LTE’s perspective, the more bits are transmitted in a subframe (time) over a specific number of Resource Blocks (frequency bandwidth), the higher the throughput and the spectral efficiency. Let’s go through the factors that impact spectral efficiency and the ways to perform LTE throughput optimization.
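To make the definition concrete, here is a minimal sketch of the arithmetic. It assumes the standard LTE numerology (a Resource Block is 180 kHz wide and a subframe lasts 1 ms); the function name and the example figures are purely illustrative and are not taken from any real network.

```python
RB_BANDWIDTH_HZ = 180_000   # one Resource Block = 12 subcarriers x 15 kHz
SUBFRAME_SECONDS = 0.001    # one subframe (TTI) lasts 1 ms


def spectral_efficiency(bits_in_subframe, num_rbs):
    """Return bits/s/Hz for one subframe transmitted over num_rbs Resource Blocks."""
    if num_rbs <= 0:
        raise ValueError("num_rbs must be positive")
    throughput_bps = bits_in_subframe / SUBFRAME_SECONDS
    bandwidth_hz = num_rbs * RB_BANDWIDTH_HZ
    return throughput_bps / bandwidth_hz


# Hypothetical example: 36,000 bits delivered in one subframe over 50 RBs.
print(round(spectral_efficiency(36_000, 50), 2))  # 4.0 bits/s/Hz
```

Doubling the bits carried in the same subframe and the same Resource Blocks doubles both the throughput and the spectral efficiency, which is why the two are treated together in this article.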

– Signal To Noise & Interference Ratio:

The most basic and common factor that controls spectral efficiency and throughput is the SINR (Signal to Interference and Noise Ratio). If the SINR of a network is bad, that puts a limit on the throughput it can achieve. So, the first thing to verify is the average SINR of the network. Let’s check some of the factors that impact SINR.

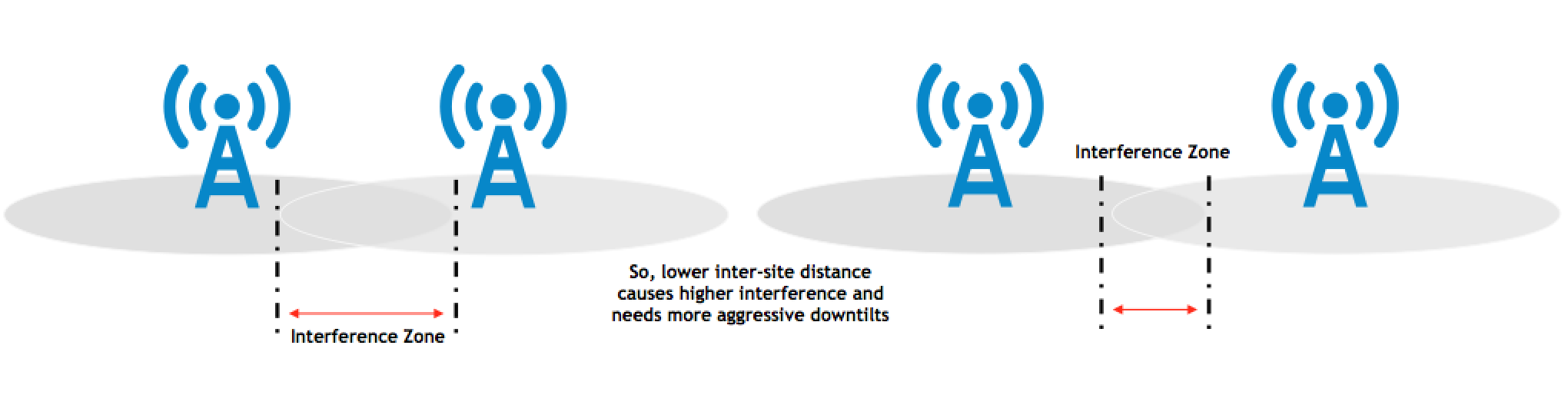

Inter-site distance

This one is a basic thing. If sites are too close to each other, they will have a higher tendency to interfere with each other and will require aggressive down-tilts to limit overshooting. The distance is something that is usually fixed as LTE sites mostly use the previously deployed network. So, there is not much to do at this level other than downtilts to improve SINR and reduce overshooting.

- Electrical Tilt Ports: It is better to use antennas which assign a different RET port (electrical tilt) to LTE. That provides flexibility for optimization. If the network uses the same RET port for LTE and other RATs (3G or 2G) then any change on LTE tilt will impact the other RAT and it takes away the flexibility. So, it is a good idea to keep this in mind in the design or expansion phase.

- Pa & Pb: Another option when the inter-site distance is small is to use a more balanced Reference Signal (RS) power. Two LTE parameters, Pa and Pb, define the power of the Reference Signals relative to the other symbols, e.g. the PDSCH symbols. An example makes this clearer. If Pa is -3 and Pb is 1, the Reference Signals have 3 dB higher power than the PDSCH symbols. When the inter-site distance is small, high Reference Signal power can result in higher interference. If the inter-site distance is large, this configuration can be helpful, because a 3 dB Reference Signal boost improves coverage: LTE coverage is controlled by RSRP, and RSRP is a direct outcome of RS power. However, for a small inter-site distance, Pa and Pb values of 0 might be a better solution, as the RS power is then not boosted compared to the PDSCH symbols. Moreover, the PDCCH/PDSCH symbols in which Reference Signals are present will have slightly higher power for 2 and 4 antenna port systems. This happens because with the previous configuration (Pa = -3, Pb = 1) the RS took the extra available power, whereas with the 0,0 configuration the extra power is used by the other channels instead of the RS. That improves the reliability of the PDSCH and can result in better throughput. This is a big topic, so I am just touching on it here and will cover it in more detail in future articles.
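The two configurations discussed above can be compared numerically. The sketch below uses the Pb-to-ratio mapping from 3GPP TS 36.213 (Table 5.2-1) and makes a simplifying assumption: the power of PDSCH symbols without RS (type A) relative to the RS is taken to be exactly Pa, ignoring the additional offsets the specification applies in some transmission modes. The function name is hypothetical.

```python
import math

# Ratio of type-B to type-A PDSCH power per Pb index (3GPP TS 36.213, Table 5.2-1).
# Value: (one antenna port, two or four antenna ports).
RHO_B_OVER_RHO_A = {
    0: (1.0, 5 / 4),
    1: (4 / 5, 1.0),
    2: (3 / 5, 3 / 4),
    3: (2 / 5, 1 / 2),
}


def pdsch_power_vs_rs_db(pa_db, pb_index, antenna_ports):
    """Return (type A, type B) PDSCH power per Resource Element in dB relative to the RS.

    Type A: PDSCH symbols without Reference Signals.
    Type B: PDSCH symbols that also carry Reference Signals.
    Simplification (assumption): type A power equals Pa.
    """
    column = 0 if antenna_ports == 1 else 1
    ratio = RHO_B_OVER_RHO_A[pb_index][column]
    type_a_db = pa_db
    type_b_db = pa_db + 10 * math.log10(ratio)
    return type_a_db, type_b_db


for pa, pb in [(-3.0, 1), (0.0, 0)]:
    a, b = pdsch_power_vs_rs_db(pa, pb, antenna_ports=2)
    print(f"Pa={pa:+.0f} dB, Pb={pb}: type A {a:+.2f} dB, type B {b:+.2f} dB vs RS")
```

With two antenna ports, Pa = -3 and Pb = 1 leave both PDSCH symbol types 3 dB below the RS, while Pa = 0 and Pb = 0 put type A level with the RS and type B roughly 1 dB above it, which is the "slightly higher power" mentioned above.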

Load & Utilization

The second factor is the load in the area or cluster. The higher the load, the higher the interference to the neighbouring cells. As the load increases, more Resource Elements are transmitted, which raises the aggregate power in the area and increases the RSSI. For a neighbouring cell, this power is interference. So, if the load increases above a threshold, it is better to add another carrier or, if another carrier already exists, to offload the congested carrier and shift the load to the uncongested one. This can be done using Load Balancing features or by tuning the cell reselection or mobility parameters.

Sometimes the actual traffic volume is not that high, but the utilization of the cell is still very high. This is usually caused by low signal quality, as users with bad SINR take a lot of RBs at a lower modulation. If the traffic is not high but utilization is, it is a good idea to check the TA and CQI for the cell. If the TA is high and the CQI is below 8 (the value depends on the frequency layer), it might be better to physically optimize the area or cell. Introducing PDCCH optimization also helps in such cases, as it can add to the PDSCH capacity, relieving congestion to an extent.

PCI Planning

As described in my PCI planning article, if adjacent cells with overlapping coverage have the same PCI modulo 3, there is a probability of RS interference between them. Such interference reduces the overall RS SINR and the demodulation capability, resulting in throughput degradation. So, PCI modulo 3 conflicts should be avoided wherever possible. In FDD networks, it is better to ensure that time synchronization is not enabled, as the lack of synchronization adds randomness to the system and reduces the PCI mod 3 impact significantly.
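A PCI modulo 3 check is easy to automate once a neighbour list is available. The following is a minimal sketch; the cell names, PCI values and the neighbour list are made-up inputs, and in practice the list of overlapping pairs would come from a planning tool or from handover statistics.

```python
def mod3_conflicts(pci_by_cell, neighbour_pairs):
    """Return the neighbour pairs whose PCIs share the same value modulo 3."""
    return [
        (a, b)
        for a, b in neighbour_pairs
        if pci_by_cell[a] % 3 == pci_by_cell[b] % 3
    ]


# Hypothetical cells with overlapping coverage.
pci_by_cell = {"A": 12, "B": 45, "C": 100}
neighbour_pairs = [("A", "B"), ("A", "C"), ("B", "C")]

print(mod3_conflicts(pci_by_cell, neighbour_pairs))  # [('A', 'B')]
```

Cells A and B both have PCI mod 3 equal to 0, so they are flagged, while C (mod 3 equal to 1) is clean against both.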

– CQI & MCS Mapping:

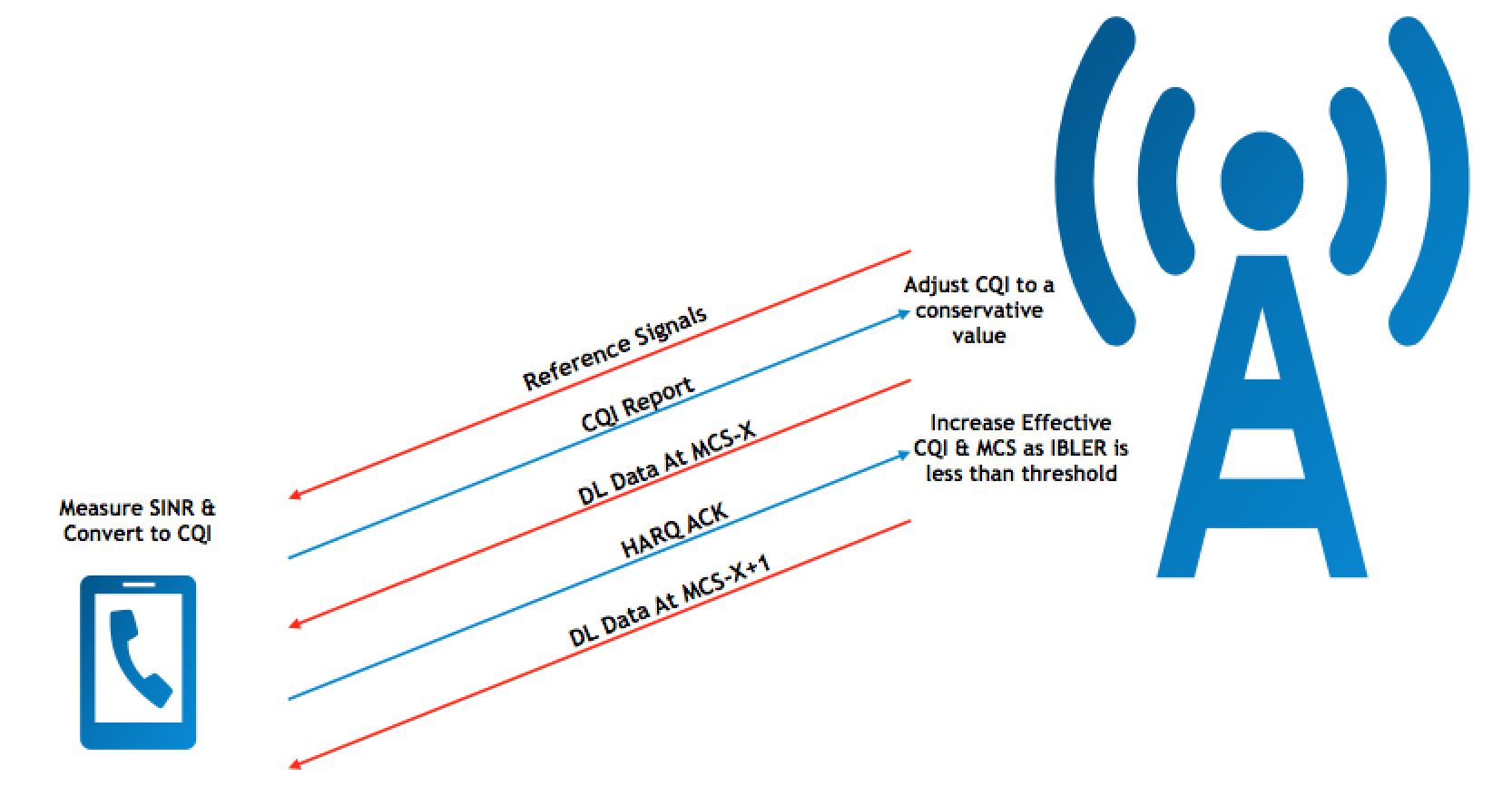

The next step is the CQI (Channel Quality Indicator). Once the UE measures its SINR, it converts it to a CQI value to report to the eNB. The eNB takes this CQI and maps it to an MCS (Modulation & Coding Scheme) value. A higher SINR results in a higher CQI value and, consequently, a higher MCS index. As the MCS increases, the throughput usually increases, so we need to ensure that we have the optimum CQI and MCS indexes for each SINR value. In LTE, there are 16 CQI indexes and 32 MCS indexes. Usually, a CQI value below 7 is considered bad and a CQI value of around 10 is considered fair.

CQI Adjustment Algorithms

The eNB adjusts the raw CQI value reported by the UE to find an optimum CQI, and this provides a higher spectral efficiency. There are basically two scenarios where this comes into play:

Consider UE-1, which measures its SINR to be around 10 dB, calculates a CQI of 9 from it and sends it to the eNB. Another UE, let’s call it UE-2, measures its SINR to be around 8 dB but also sends a CQI of 9, because UEs have different chipsets from different vendors and can report different CQI values for the same SINR. The eNB now has two UEs with the same CQI value. If it gives both of them the same MCS (for example MCS20), UE-1 might be able to work with MCS20, but UE-2 will not be able to decode MCS20 properly at 8 dB SINR. To address this issue, the eNB maintains an outer loop based on BLER (Block Error Rate). Most vendors use a BLER target of 10%. Now consider the same scenario: both UEs get MCS20 and UE-1 works with a BLER of 10%, but UE-2 has a lower SINR, so it will have a relatively higher BLER. Let’s say the eNB calculates the BLER to be around 13%, so it lowers the MCS for UE-2 to 19. If the BLER still remains above 10%, the eNB reduces the MCS further until the BLER target is met.

Similarly, if the UE sends a CQI value of 8 and eNB initiates downlink data with a MCS of 16 and it finds out that the BLER value is below 10%, it will increase MCS to 17 or 18 until the BLER target is achieved. This scenario will increase the spectral efficiency and the throughput.

So, we need to ensure that CQI adjustment or dynamic CQI assignment algorithms or outer loop control based on BLER is activated to achieve maximum gains from the channel.
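The outer loop described in this section can be sketched as follows. This is a common textbook formulation, not any vendor’s actual algorithm: every NACK lowers an offset by a fixed step and every ACK raises it by a smaller step, with the two steps chosen so that they balance when the NACK ratio equals the BLER target. The class name, the step size and the offset limits are assumptions for illustration.

```python
import math


class OuterLoopLinkAdaptation:
    """BLER-driven correction applied on top of the CQI-based MCS (a sketch)."""

    MAX_DATA_MCS = 28  # MCS 29-31 are reserved in the legacy LTE downlink MCS table

    def __init__(self, bler_target=0.10, step_down=0.5, max_abs_offset=10.0):
        self.step_down = step_down
        # Up and down steps cancel out when the NACK ratio equals bler_target.
        self.step_up = step_down * bler_target / (1.0 - bler_target)
        self.max_abs_offset = max_abs_offset
        self.offset = 0.0

    def on_harq_feedback(self, ack):
        """Update the offset after the HARQ feedback of an initial transmission."""
        self.offset += self.step_up if ack else -self.step_down
        self.offset = max(-self.max_abs_offset, min(self.max_abs_offset, self.offset))

    def select_mcs(self, cqi_based_mcs):
        """Apply the (rounded-down) offset to the MCS derived from the reported CQI."""
        corrected = cqi_based_mcs + math.floor(self.offset)
        return max(0, min(self.MAX_DATA_MCS, corrected))


# UE-2 from the example: CQI maps to MCS20, but the first block is NACKed.
ue2 = OuterLoopLinkAdaptation()
ue2.on_harq_feedback(ack=False)
print(ue2.select_mcs(20))  # 19
```

A UE whose blocks are consistently ACKed drifts the other way, so the same loop also covers the second scenario, where the MCS is raised from 16 to 17 or 18.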

CQI Convergence

Another important thing is that some vendors use low CQI values initially. For example, if the UE has just accessed the cell and it shares a CQI value of 9, the eNB will treat it as a CQI of 7 and a corresponding MCS will be allocated to it. Then after subsequent transmissions, the eNB will keep monitoring BLER and once the credibility of the UE’s CQI is ensured, the eNB will converge to the effective CQI. Some vendors keep this as a hard-coded algorithm while others provide parameters to tune this and then these parameters can be tuned to limit this behaviour resulting in faster convergence and higher throughputs especially for small packet data transfers. For instance, a UE which has a small amount of data accesses the cell and gets its data within two to three TTIs (subframes), then the eNB will not have enough CQI samples to converge quickly. The same UE will try again next time and the eNB will keep using a conservative CQI and MCS for such a UE. So, if the delta for initial CQI value is reduced, such UEs will get a less conservative CQI and MCS resulting in better data rates.

CQI Periodicity

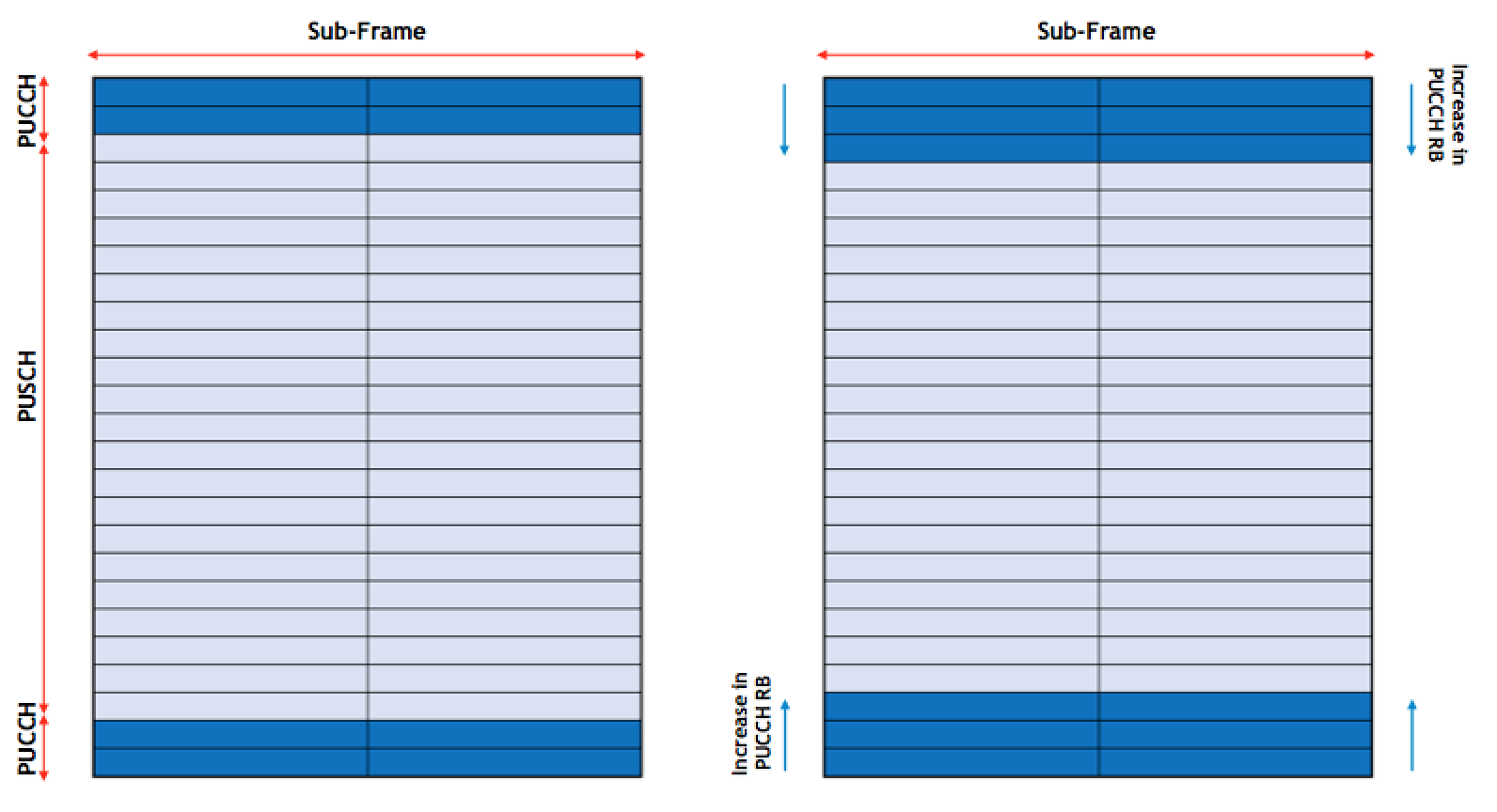

Another thing that helps is the CQI periodicity or the frequency of CQI reporting from the UE. If the UE reports CQI after a large interval, then the eNB might not have the most accurate CQI to begin with and it will take longer time to converge to the optimum MCS. Usually CQI reports are shared every 40 or 80 ms but if the UE is moving or if the channel is fluctuating then 40 or 80 ms can be considered a large interval. If we shift the CQI period to a smaller value like 20ms or 10ms, then the CQI will be more accurate and that should improve the spectral efficiency. However, the lower the interval, higher the number of CQI reports and higher the PUCCH utilization. Periodic CQI reports are sent over PUCCH in uplink so if we reduce the CQI reporting interval, that will increase the load on PUCCH. This can lead to interference on PUCCH and it can also result in RRC rejections due to PUCCH congestion. eNB needs PUCCH for CQI, HARQ & SRIs so if the PUCCH is congested, then it will have to reject new incoming access requests. This can be solved by using the following two approaches

- Adaptive or Dynamic PUCCH : This is introduced by vendors to resolve the RRC Rejections due to PUCCH overload. This allows the PUCCH to expand and it can consume more Resource Blocks if required. The down side is that the PUCCH takes the Resource Blocks from the PUSCH which can then limit the uplink throughput. However, usually the networks require higher downlink capacity so uplink can be compromised to an extent.

- Adaptive CQI Period : This is another enhancement that some vendors have. This makes the CQI reporting interval dynamic and the eNB can adjust it based on the user’s characteristics. This way, if the eNB finds a UE that has no channel fluctuation (mostly stationary), it can use longer CQI reporting interval like 80ms and eNB can reduce the interval to 10ms for a UE that has high fluctuation. This provides an optimum performance gain in CQI accuracy without impacting the PUCCH load to that extent.
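The trade-off between CQI accuracy and PUCCH load comes down to simple arithmetic. The sketch below only counts periodic CQI reports per second for a given number of connected UEs; the UE counts are hypothetical, and the actual PUCCH capacity per Resource Block is vendor and configuration dependent, so it is deliberately left out.

```python
def cqi_reports_per_second(connected_ues, period_ms):
    """Number of periodic CQI reports the cell receives on PUCCH each second."""
    return connected_ues * 1000 / period_ms


# Hypothetical cell with 200 connected UEs.
print(cqi_reports_per_second(200, 80))  # 2500.0 with a fixed 80 ms period
print(cqi_reports_per_second(200, 10))  # 20000.0 with a fixed 10 ms period

# Adaptive CQI period: 150 stationary UEs at 80 ms, 50 fast-changing UEs at 10 ms.
adaptive = cqi_reports_per_second(150, 80) + cqi_reports_per_second(50, 10)
print(adaptive)  # 6875.0
```

In this made-up case, the adaptive period gives the UEs that need it a 10 ms interval at roughly a third of the reporting load of moving every UE to 10 ms.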

There is another type of CQI reports known as Aperiodic CQIs but we will discuss that in the next episode of the throughput optimization.

Adaptive BLER Targets

Firstly, let’s understand the concept of BLER. It can be divided into two categories:

- Initial BLER: When the eNB sends data to the UE and UE is unable to decode it, then it will send a HARQ NACK to the eNB. A NACK means that the eNB will have to retransmit the data and this NACK is considered IBLER or Initial Block Error.

- Residual BLER: If the UE is unable to decode the data even after retransmission, the UE will send another NACK and the eNB will have to retransmit again. However, there is a limit to these retransmissions and usually they are configurable. Commonly, these retransmissions are set to 4 and after 4 retransmissions, the eNB will not retransmit at HARQ level and consider this as a Residual Block Error.

The BLER target is maintained by the IBLER so this means that the eNB tries to maintain an IBLER of 10% for each UE. RBLER is usually very low and it is supposed to be less than 0.5%. The question may arise that why don’t we reduce the IBLER further and make it low as that should reduce retransmissions. The problem here is that lowering IBLER means that we need to lower the MCS. Even a very low MCS will not ensure a linear decrease in IBLER but it will degrade throughput excessively. So, various simulations and field trials were done to come up with an optimum target of 10% for IBLER which is followed by most of the vendors.
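As a rough illustration of how the two figures relate, the sketch below derives IBLER and RBLER from three counters. The counter names are hypothetical, since every vendor names and pegs these counters differently, and the numbers are invented.

```python
def bler_figures(initial_transmissions, initial_nacks, residual_failures):
    """Return (IBLER, RBLER) as fractions of the initial transmissions.

    initial_nacks: blocks NACKed on their first transmission.
    residual_failures: blocks still not decoded after the last HARQ retransmission.
    """
    if initial_transmissions <= 0:
        raise ValueError("initial_transmissions must be positive")
    ibler = initial_nacks / initial_transmissions
    rbler = residual_failures / initial_transmissions
    return ibler, rbler


# Hypothetical counters for one cell over a measurement period.
ibler, rbler = bler_figures(100_000, 10_000, 300)
print(f"IBLER {ibler:.1%}, RBLER {rbler:.1%}")  # IBLER 10.0%, RBLER 0.3%
```

A cell like this one sits on the 10% IBLER target while its RBLER stays below the 0.5% guideline mentioned above.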

However, recently it has been found that a BLER target of 10% works fine in fair conditions, but when the radio conditions are bad or good, other BLER targets provide higher gains. For instance, if the radio conditions are bad, a BLER target of 10% keeps the MCS very conservative, whereas increasing the BLER target raises the MCS and provides higher throughput. So, such parameters can be tuned, if available, to get better results.

– Mobility Strategy:

One thing that can really help in increasing the throughput is the optimum mobility strategy.

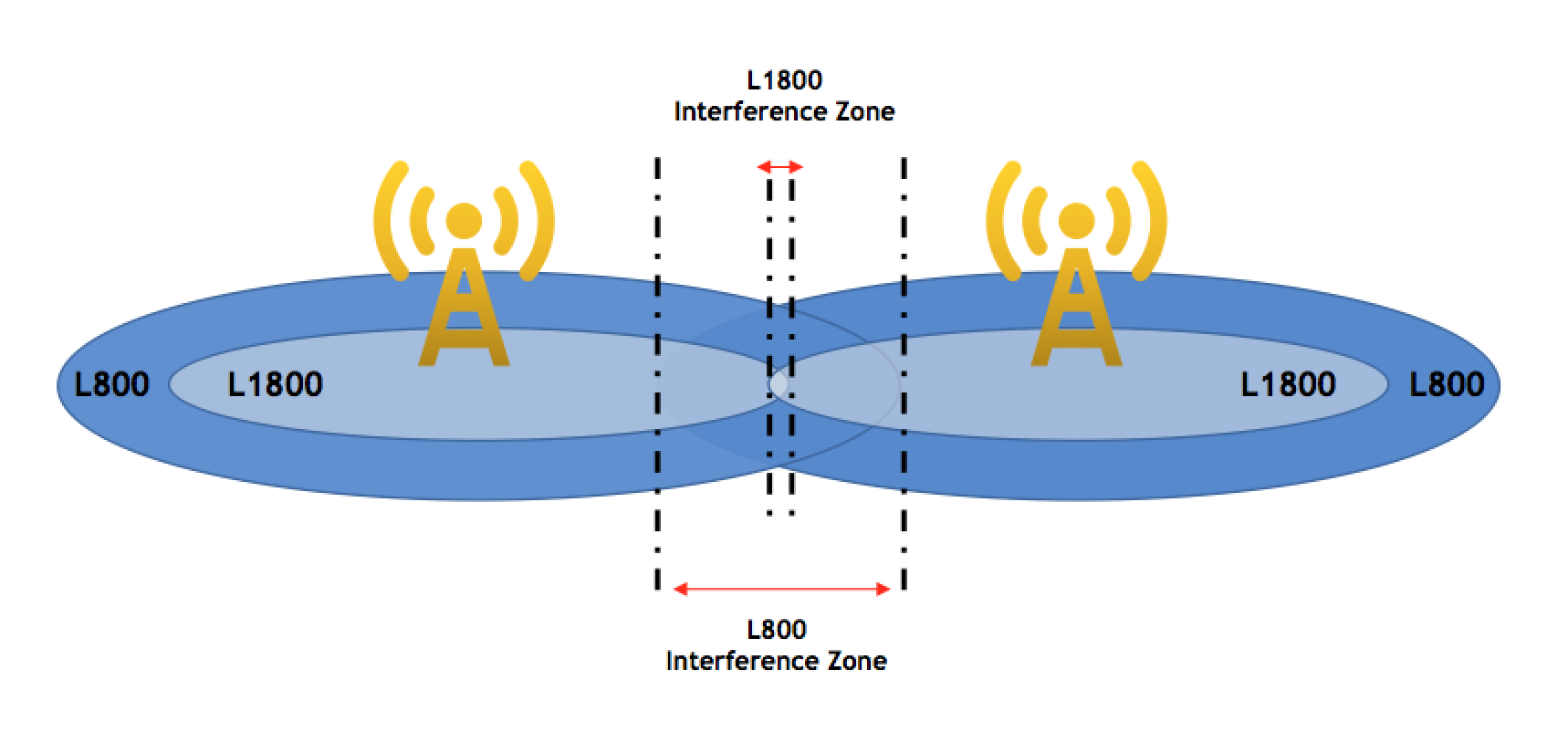

Transition to Higher CQI layer

Consider two LTE layers, for instance L800 and L1800 with same bandwidth. In this case, L800 will have a higher coverage as it is a lower frequency. So, the user count on L800 will be higher compared to L1800. However, the lower frequency layer also has higher interference since it has a bigger coverage radius. So, that will result in a lower CQI and a bad throughput. L1800 throughput will usually be better even with same bandwidth because it will have better CQI. So, the most important thing is to ensure that the layer with the better CQI gets most of the traffic. This can be done in many ways and I have jotted down a few of those.

The easiest way is to give a higher priority to L1800 and that will shift most of the UEs in L1800 coverage away from L800. This will ensure better CQI for users and thus a better throughput. Another way would be to keep them on same priority and provide a frequency offset to move the users to L1800. This is more reasonable if L1800 is also getting overloaded then the amount of load to be shifted can be tuned by varying the offsets.

I prefer load shifting by cell reselection instead of handovers. If the handover thresholds are changed or frequency-priority-based handovers are used, measurement gaps are triggered. To move from one frequency to another in connected mode, the UE needs to measure the target frequency, and to do that it goes into a gap mode of 6 ms. This gap repeats every 40 or 80 ms. If it repeats every 40 ms, the UE cannot be scheduled for 6 ms in every 40 ms. Moreover, when the UE receives data, it needs to send a HARQ ACK/NACK 4 ms later. Since the eNB knows when the UE will be in gap mode, it will not schedule any data for the UE in the 4 ms before the gap. That makes 10 ms in every 40 ms during which the UE cannot be scheduled, which is 25% of the time. So, inter-frequency handovers should be minimized, as they can cause around 25% degradation in throughput. Cell reselection works in idle mode, so it is a much better way to move users between the layers.
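The gap arithmetic above can be written out as a small helper. It simply reproduces the reasoning in this section (6 ms gap plus the 4 ms before it in which the eNB avoids scheduling) and is not a model of any particular scheduler; the function name is illustrative.

```python
def unschedulable_fraction(repetition_ms, gap_ms=6, harq_guard_ms=4):
    """Fraction of time a UE with measurement gaps cannot be scheduled in downlink."""
    return (gap_ms + harq_guard_ms) / repetition_ms


print(unschedulable_fraction(40))  # 0.25  -> 25% with a 40 ms gap repetition
print(unschedulable_fraction(80))  # 0.125 -> 12.5% with an 80 ms gap repetition
```

The 80 ms pattern halves the loss, but the UE then needs longer to complete its inter-frequency measurements.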

Load Balancing

Another way is to enable load balancing between the layers and ensure that the higher-CQI layer gets more load. Load balancing usually comes in two modes:

- Connected Mode: In this case, the eNB calculates the PRBs or user count and tries to maintain target load values by performing load based handovers between the layers.

- Idle Mode: In this case, the eNB sends the frequency in the RRC Release command to the UE. eNB increases the priority of the target frequency for that UE temporarily and the UE tries to reselect to that frequency in idle mode.

Once again, I prefer idle mode based load balancing, as it does not introduce inter-frequency handovers and still gets the work done. However, idle mode based load balancing will not have a significant impact if the layers have different priorities, since one layer already has a higher priority and idle mode based load balancing also moves users by increasing the priority. So, if the UEs are not moving to the higher priority layer, then that means the layer has coverage constraints, and idle mode based load balancing will also be unable to shift the load.

Vertical Beam-Width

Another important factor is that many times, the low band like L800 has a bigger vertical beamwidth than the corresponding higher band. This effectively means that at the same tilt value, the L800 will have a much bigger coverage foot print than the L1800. So, before making any mobility strategy, it is important to verify the antenna patterns especially the vertical beam-width for all the layers. If the beam-width of one layer is significantly wider than the other, then ensure to put a tilt offset between the two to keep an optimum and balanced coverage.

– Scheduler Fairness:

Another important factor is the scheduler type. A scheduler can work in multiple modes:

Round Robin: In this mode, the scheduler provides equal resources to all users. This is not an optimum algorithm as different users have different data requirements.

Max C/I: This mode provides significantly higher resources to users in good coverage conditions. This mode can starve the cell edge users and they will not get enough data resulting in degradation in user experience.

Proportional Fair: This scheme maintains fairness between all users, keeping a healthy resource sharing between all user types. The basic concept is to strike a balance between users, and it does that by prioritizing based on CQI and data rates. So, if the CQI is high, it will give resources to that user first, but since it needs to maintain a fair data rate for all users, the cell edge users will also be scheduled. This scheme is essentially a combination of round robin and Max C/I: it provides more resources to users with higher CQI than round robin does, but it also provides more resources to cell edge users than Max C/I does. Hence the name Proportional Fair.
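The difference between the three modes shows up in the metric each one uses to pick the next user. The sketch below uses the classic textbook metrics (longest wait for round robin, highest instantaneous rate for Max C/I, instantaneous rate divided by average served rate for Proportional Fair). Real schedulers add QoS weights and other vendor-specific terms, and all names and numbers here are made up.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    inst_rate: float   # rate achievable in this TTI, driven by the current CQI (Mbps)
    avg_rate: float    # average rate served to this user so far (Mbps)
    waited_ttis: int   # TTIs since this user was last scheduled


def pick_user(users, mode):
    """Return the user the scheduler would serve next under the given mode."""
    if mode == "round_robin":
        return max(users, key=lambda u: u.waited_ttis)
    if mode == "max_ci":
        return max(users, key=lambda u: u.inst_rate)
    if mode == "proportional_fair":
        return max(users, key=lambda u: u.inst_rate / max(u.avg_rate, 1e-9))
    raise ValueError(f"unknown scheduler mode: {mode}")


users = [
    User("near", inst_rate=30.0, avg_rate=20.0, waited_ttis=1),
    User("edge", inst_rate=5.0, avg_rate=2.0, waited_ttis=3),
]

for mode in ("round_robin", "max_ci", "proportional_fair"):
    print(mode, "->", pick_user(users, mode).name)
```

Here Max C/I picks the near user, while Proportional Fair picks the cell edge user because its achievable rate is high relative to what it has been served so far (5/2 versus 30/20). Once the edge user has been served for a while, its average rate rises and the near user wins again.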

The user throughput KPI improves with Max C/I scheduler as it provides more resources to good users resulting in higher user throughput but the cell throughput is improved with Proportional Fair algorithm as it strikes a balance between all users. So, if the user throughput KPI is to be improved then the scheduler can be tilted towards Max C/I while Proportional Fair can be used if cell throughput gain is required. The optimization at this level really needs deep understanding of the scheduler’s algorithm and it also depends if the specific vendor provides the options to play with the scheduling weights.

These are the basics to improve the spectral efficiency for a network. In the next part, I will explain the features that can be used to improve throughput along with the scenarios where they will be applicable.

In case of any queries or feedback, please drop a comment below and I would love to respond and help. Also, if you liked this article, please subscribe to our YouTube channel – Our Technology Planet for more exciting stuff and videos.

Ali Khalid

Hello Ali,

Very well explained, I have a question regarding spectral efficiency & BLER, I’m trying to improve the User throughput by implementing the DL Retransmission RB compression feature controlled by “ucDLRetrRBOptSwtch” parameter in ZTE equipment. Throughput is improving by this, but this is also increasing the BLER. My question is why the BLER is increased, although my throughput is improving and also the NACKs are decreasing.

Parameter:

ucDLRetrRBOptSwtch

Parameter Description:

This parameter enables or disables the optimization for the retransmission of RBs in the downlink to improve downlink spectrum efficiency. If it is set to Open, the RBs that are first retransmitted in the downlink with a NACK response received are compressed.

Hello Ali,

Thank you for your comment.

Please follow the link below and post your question/feedback in Youtube comments section so that Ali Khalid will be able to answer.

https://youtu.be/8fR5W4tDJjs

Beautifully Explained. Thank you

Hi Ali,

This is a very informative article. I greatly appreciate your effort.

Though I have a question.

Which RAN layer is responsible for making decision regarding MCS based on the CQI information received from the UE?

Hello Omair,

Thank you for your comment.

Please follow the link below and post your question/feedback in Youtube comments section so that Ali Khalid will be able to answer.

https://youtu.be/TbgOBoD7cNQ

i want to ask if i must measure sinr for every tti for every user in scheduling ? or it fixes along the process

if must how i will keep tracking the modulation index for every user in whole processing of scheduling

Hello Samar,

Thank you for your comment.

Please follow the link below and post your question/feedback in Youtube comments section so that Ali Khalid will be able to answer

LTE vs 5G SINR – https://youtu.be/lixbaIEPglQ

Hi Sir, It’s just a fantastic article. It covered a lot of things. I really appreciate your efforts. Thanks

Hi Ali , wonderful article and keep it up!

One question about adaptive target bler , what tradeoff this feature put toward us? because if we increase target bler

to high values then we expect high amount of retransmissions so latency and throughput will be degraded -more worse.

So how that feature can help in which scenarios? what’s the gain of using adaptive target Bler?

HI Ali, I have this doubt. If there are two cells radiating with same frequency and their coverage is overlapped. but there is no UE attached to any of the cell. Then will there be any interference in UL?

The uplink interference mostly comes from the UEs so if there are no Users on the cells, then they will not be interfering with each other in uplink.

Hi Mrs Ali and Haider, thank you for those valuable informations, please could you advice me a practical book for LTE optimization principles.

Do we have Bler calculation uplink PDCP Layer ?

No BLER is calculated at MAC

Any idea why DL_DRB throughput is higher than DL_Cell throughput in 700 layer?

If DRB throughput is higher than cell throughput then it usually indicates that average user per TTI is around 1 or less. If user per tti increases above 1 then DRB throughput starts getting lower than cell throughput.

Note : I am assuming that here the DRB throughput is [(dl traffic – dl traffic in last tti) / time excluding last tti]

Hi Ali,

both user throughput and cell throughput measured at the same layer ..

Thanks.

Yeah in LTE, most of the traffic related counters are pegged at PDCP

Thank you Mr.Ali

thank you Mr. Alim

pleae to do another article about the Pa & Pb

Thanks Ali for breaking down not-so-easy topics to very understandable levels.

You are welcome 🙂

Nice article. Can you also post something about how to improve RTP Gap%?

Dear Khalid.

Thanks for your ultimate Post on throughput. u r ultimate.

dear Khalid Please explain to me for huawei parameter DlVarIBLERtargetSwitch and CqiAdjInitialStep , how they work.

and please post your Part-3 on throughput.

The variable BLER is an enhancement on the original outer loop. Originally BLER of 10% was used but with this, the BLER target can vary between 30% and 10%. The basic idea is that low CQI users can use a higher BLER target to optimize resource efficiency. It also adds a criterion about packet size based on TBS to the algorithm such that smaller packets get assigned to higher BLER target.

AOA, Can we distinguish Small Packet and Large packet TBS through any counter? Or can we Tune IBLER to higher value for Low CQI specific Cells to Improve DL Throughput.?

I think you can just try the enhanced DL IBLER variable target switches. The definition of small packet can be vendor specific.

Dear Khalid, for huawei there parameter DlVarIBLERtargetSwitch and it can vary from 30% to 10%.

How it works ? I mean how the value of BlerTrget which is from 10% to 30% chosen? according to what and does eNB assign that DlVarIBLERtargetSwitch to be a certain value from 10% to 30%?

Please explain to me for huawei parameter DlVarIBLERtargetSwitch how it works and how the value of DlVarIBLERtargetSwitch chosen/determined?

Thank you Mr.Ali for sharing this article , Really got benifits and expecting more in future.

Glad to see that it helped 🙂

hi,

is initial BLER usually much higher than residual BLER?

yeah, usually initial BLER is around 10% while the residual bler should be less than 1%

Aoa,

“In FDD networks, it is better to ensure that time synchronization is not enabled”, is there any switch to enable it?

Jazakallahukhairan ali bhai for article

H Ali,

Thank you very much.

It is very well-drafted information, valuable and still simple.

Can i please request to share some information about root sequences, how we can describe the length of 837 codes, is it no of bit or what , the relation of cyclic shift, how large cell radius consumes more codes.

like whole RACH sequence planing, i know you will describe this with the same level of power of simplicity, many will take benefit out of it .., it is just a request if you can take out some time out of your busy schedule.

Yeah sure, I will add this to my list of upcoming articles 🙂

Thanks for the write-up. One question regarding to Pb and Pa:

“Moreover, the PDCCH/PDSCH symbols in which Reference Signals are present will have a slightly higher power for 2 and 4 antenna port systems”

The question is, are Pb and Pa values applied to all antenna ports or each port has their Pb and Pa values?

logically Pa and Pb are Port A and Port B so one for each port ?

Im interested in this as I have the issue of a strong signal an a weak signal from different towers. I’d like to be able to adjust this to the 0:0 setting and see if it helps. the weaker signal is LTE and gives me LTE-A and 2 frequency channels ( i guess ports). are these adjustments available in UI , consol interface or do they have to be patched. I have a ZYXEL

Very Good & well Explained Ali.

Thanks

Thanks Dheeraj 🙂

AOA Ali bhai,

jazakallah for such brief documentation. can you please share the path of “These are the basics to improve the spectral efficiency for a network. In the next part, I will explain the features that can be used to improve throughput along with the scenarios where they will be applicable”

as i am unable to find next part.

Have not published that yet 🙂

Thanks Ali Khalid….for the detailed analysis.

Can you suggest some parameters to check for UL throughput also.

Thanks Ali Khalid…

Just need more information about the Pa & Pb relationship.

Hi,

I have a query w.r.t last tti being omitted for throughput calculation, well it goes well if we have normal TDD cell, but when we have M-MIMO cell where we have many users being scheduled for single TTI, we might end up in a situation where last TTI may have large amount of data and we might not get the exact user thpt. So if we start considering last tti as well our actual thpt might increase in that case. Do u guys have more experience around this…

Regards

Munish

nice explanation

good doc good explained easy to understand

why not go for a book Ali

there is also LTE Frequency Selective Scheduling which is using subband cqi reports

are you aware if Huawei or any manufacturer is supporting this

could not find anything in Atoll

regards

Francesco

Thanks Francesco. And FSS is supported by almost all the vendors. But usually now it is only being used in uplink and not in downlink (atleast in most of the networks).

Dear Ali,

how do u think about cqi optimization only taken by system not by drive test report . Is it a true way to get good coverage

Yeah if you can get RSRP and CQI from the system (for instance, a Geo solution), then it depicts the actual user’s coverage and quality so it can be considered as a true picture and can also be used to perform optimization. The concept of MDT (Minimization of Drive Test) is gaining popularity.

Hi,

Thanks for the explanation!!!

When inter-site distance is low

Current condition PA = -3dB, PB = 1, RS power = RS

Planned condition PA = 0dB, PB = 0, RS power = RS – 3dB

with the planned changes, RS power will be reduced and it will reduce only the CRS SINR.

How does the PDSCH SINR/Thrp increase with this change? is it due to type B REs power increment?

Yeah, it is due to type-B and also the impact of RS interference reduces overall.

Hi,

Great and simple. Thanks for very quick and efficient overview of key points. It will be very nice to read your comments about Carrier Aggregation.

Thanks Vladimir. I will write on CA soon as well.

You write in great details with nice and easy explanations. However I have a request you to explain about PDSCH REs .

That is if by definition 1 RB is 84 REs (i.e 7 symbols in 1 slot) . And there are 2 slot per sub frames then we say that there is only 100 RBs(in 20 MHz) whereas as per definition It should be 200 RBs.

Second Question :

For PUCCH RBs are allocated diagonally in slots. That is RB is used in both slot . So now we have 100 RBs or 200 ??

PDSCH REs = (Control REs) ,here PDSCH REs should be divisible by 84 ???As remaining REs should be multiple of RBs ??

Khalid ji,

Great explanation !!

But i need to know more about the CQI convergence?

Any cons if we reduce the CQI convergence threshold?

Can you any parameter that can be tuned for adjusting this threshold (in Ercs, Huawei, Nokia and ZTE vendors)?

Thanks

Perfect Explanation, Ali Khalid

Thanks Faisal 🙂

hi, very informative…plz share congestion types in lte & resolution

Good information & analysis. I request you to suggest the Parameter Abbreviated names too in Nokia system for modification. So, it will be very easy to implement the changes.

Thanks Khalid ji for your valuable information

In FDD networks, it is better to ensure that time synchronization is not enabled as that adds a randomness to the system and PCI mod3 impact is reduced significantly.

In IP RAN CA Scenario, we need to have time synchronization either by GPS or an IP clock, will enabling that time synchronization introduce modulo 3 interference?

In all such cases, where time synchronization is introduced, PCI mod3 impact will be seen.

First of all thank you for useful information.

I’ve some confusion understanding PDSCH power & ref signal power considering below 3 scenario. If you can please help or confirm below 2 points?

i.e. Case1. 5 MHz Channel with Ref Signal Power of 18 dBm with Paoffset=dB0 & Pboffset=1 (Total Max DL power=43dBm)

Case2. 10 MHz Channel with Ref Signal Power of 18dBm with Paoffset=dB0 & Pboffset=0 (Total Max DL power=46dBm)

Case3. 10 MHz Channel with Ref Signal Power of 18 dBm with Paoffset= -dB3 & Pboffset=1 (Total Max DL power = 43dBm)

In my understanding,

Ref Signal Power is the same at 18 dBm, so coverage/footprint will remain the same in all 3 cases?

DL throughput will be ordered Case2 > Case3 > Case1 (Case3 will have 3 dB less PDSCH power compared to Case2, but still higher throughput than Case1 due to the increased bandwidth)?

Yeah the coverage is based on RS power so it will be similar while the PDSCH power usually drives the throughput. So, your understanding is correct.

Thanks Ali for this very good article. It’s worth reading. I have one query: in a network there are 3 layers, 20 MHz, 10 MHz and 5 MHz. The aim is to make the traffic split 50:30:20, but the 5 MHz layer is more loaded after enabling MLB. What could be the optimum threshold to achieve this?

MLB strategy has a lot of variables so it is difficult to provide a single value for such a threshold. Personally, I try to use the mobility strategy to do the load balancing in idle mode.

HI Ali,

Idle mode load balancing is good but not very effective, due to insufficient coverage overlap between different frequencies, compared to connected mode load balancing, which is very effective.

My Question is whether MLB Works for VOLTE calls as well?

High UL RSSI value impacts DL Throughput/UL Throughput?

What are the Pros and Cons of increasing the Target BLER% or decreasing the Target BLER%

Increasing the BLER target means that the MCS will increase, so it can improve throughput. However, the optimum BLER target can vary depending on channel conditions and data volume. If the BLER target is too high, the MCS will be increased, but the excessive retransmissions can degrade both throughput and latency.
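One common way to picture the link between the BLER target and the MCS is an outer-loop adjustment driven by HARQ feedback. The sketch below is only an illustration under that assumption, not any vendor's actual algorithm; the function name `olla_offset`, the 0.5 dB step and the feedback pattern are all hypothetical.

```python
def olla_offset(feedback, target_bler, step_down_db=0.5):
    """Accumulate a link-adaptation offset (dB) from HARQ feedback.

    feedback: iterable of booleans, True for ACK and False for NACK.
    The step-up size is chosen so that the offset stays flat when the
    observed NACK ratio equals target_bler (hypothetical parameter values).
    """
    if not 0 < target_bler < 1:
        raise ValueError("target_bler must be between 0 and 1")
    step_up_db = step_down_db * target_bler / (1 - target_bler)
    offset = 0.0
    for ack in feedback:
        # ACK nudges the offset up (more aggressive MCS), NACK pulls it down
        offset += step_up_db if ack else -step_down_db
    return offset


# Same channel (10% NACKs), two different BLER targets
feedback = ([True] * 9 + [False]) * 10
print(olla_offset(feedback, target_bler=0.10))
print(olla_offset(feedback, target_bler=0.20))
```

With the same 10% NACK ratio, the 10% target leaves the offset close to zero, while the 20% target drives it positive, which corresponds to selecting a higher MCS.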

Thanks Ali, what will happen if we decrease the target BLER?

The call drop rate will increase, right?

Yeah that is also a possibility

Dear

In my network, subscribers are initially assigned QCI9 and then re-configured to QCI6/7/8. Does that impact the latency? Thanks.

Dear

Thanks for this post.

I have a question about throughput. 3GPP TS 36.314, section 4.1.6.1, is about Scheduled IP Throughput in DL. Why does it exclude transmission of the last piece of data in a data burst? What is the last piece of data in a data burst? And what is the difference between Scheduled IP Throughput in DL and the amount of PDCP traffic in the network OSS (except for the QCI dimension)?

I have only just come across this topic and would appreciate an explanation. Thank you.

Let’s understand the basics behind this issue. Consider that 83 bytes of traffic arrive for a UE. Let’s assume that the eNB can transfer 20 bytes per subframe or TTI. The eNB will transfer these 83 bytes to the UE in 5 TTIs as 20 + 20 + 20 + 20 + 3. Now, the throughput would be 83/5 = 16.6 bytes per ms. The air interface capability was 20 bytes per ms, but the measured throughput is degraded by the small data volume in the last TTI. Therefore, to give an accurate picture of the air interface throughput, it was decided to remove the last TTI from both the numerator and the denominator. The new throughput would be (83-3)/(5-1) = 80/4 = 20 bytes per ms.

The 3 bytes that are removed here are pegged in the PDCP traffic volume.
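The arithmetic of the 83-byte example can be reproduced with a small sketch. The function name `burst_throughput` and the handling of a burst that fits in a single TTI are assumptions made for illustration; real counters are pegged by the eNB, not computed like this.

```python
def burst_throughput(total_bytes, bytes_per_tti):
    """Return (raw, last_tti_removed) throughput in bytes per ms for one burst.

    Assumes 1 TTI = 1 ms and that every TTI except the last is fully used.
    """
    if total_bytes <= 0 or bytes_per_tti <= 0:
        raise ValueError("total_bytes and bytes_per_tti must be positive")
    full_ttis, remainder = divmod(total_bytes, bytes_per_tti)
    # Per-TTI payloads, e.g. 83 bytes at 20 bytes/TTI -> [20, 20, 20, 20, 3]
    ttis = [bytes_per_tti] * full_ttis + ([remainder] if remainder else [])
    raw = sum(ttis) / len(ttis)
    if len(ttis) < 2:
        # Burst fits in one TTI: nothing is left once the last TTI is removed
        return raw, None
    # Remove the last TTI from both the numerator and the denominator
    trimmed = (sum(ttis) - ttis[-1]) / (len(ttis) - 1)
    return raw, trimmed


raw, trimmed = burst_throughput(83, 20)
print(raw, trimmed)  # 16.6 and 20.0 bytes per ms
```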

I understood it shortly after reading. Thanks very much.

Hi Ali,

What is the maximum total number of bytes that can be sent per TTI with 64QAM modulation?

In LTE, 64QAM has multiple MCS indexes, and you can get the exact number of bits per TTI for each MCS/TBS from the 3GPP TS 36.213 document.

If you change Pa=-3 and Pb=1 to Pa=0 and Pb=0, will you reduce the coverage? Why? Could you please explain that?

In simple words, RS power is proportional to Pb value. If Pb is reduced RS power is reduced. Coverage is based on RSRP so if RS power is reduced, then that will result in reduction of RSRP and consequently a loss in coverage.

Ok. Thanks. Just one more question. How do I calculate Pa=-3 as 1/2 in linear terms?

Thanks Ali.

How can I convert Pa=-3 to 1/2 in linear terms and Pa=0 to 1?

Let me explain the basics behind Pa=0 and -3. Usually, when there are 2 Tx and 2 CRS ports, the RS REs of port 2 are DTXed on port 1 and vice versa. So, the RS of port 1 can increase its power by taking the power of the DTXed REs. In this case, the RS will be 3 dB higher than the other REs. This means that the PDSCH will be at -3 dB relative to the RS, and usually we use the config of Pa=-3 in this case.

However, if there is only one CRS port, then there will be no DTXed REs and the RS power will not be boosted. In that case, the RS power will be equal to that of the PDSCH symbols and therefore Pa=0. These are the most common use cases.
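On the conversion itself: a dB value maps to a linear power ratio as 10^(dB/10), so -3 dB is approximately 1/2 and 0 dB is exactly 1. A minimal sketch, with hypothetical helper names:

```python
import math


def db_to_linear(db):
    """Convert a power ratio in dB to a linear ratio."""
    return 10 ** (db / 10)


def linear_to_db(ratio):
    """Convert a linear power ratio to dB."""
    return 10 * math.log10(ratio)


for pa_db in (0, -3):
    print(pa_db, round(db_to_linear(pa_db), 3))

# Going the other way, a ratio of exactly 1/2 is about -3.01 dB
print(round(linear_to_db(0.5), 2))
```

Note that -3 dB is a rounded figure: the exact linear value is about 0.501, which is why it is normally quoted as 1/2.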

Hi Ali,

If I boost the RS further, for example by +6 dB, the power is also taken from PDSCH type B. Will that reduce the throughput even when the received signal is good?

Yeah, under normal conditions, the power taken from PDSCH will reduce the PDSCH signal decoding capabilities so it should lower the throughput.

SIR,

Can you share your valuable thoughts on the topic of MIMO?

Very useful article Ali. Enjoyed it as a casual read.

I would like to request some more information on the scheduling schemes, i.e. Max C/I vs. Proportional Fair. What do the vendors normally prefer, and what are the pros and cons of each? Considering dense deployments for 5G and the possibility of user-centric networks, which scheme would be preferred and why?

Vendors usually go for a PF based scheduler that can have a bias towards Max C/I. As we are progressing, the vendors will provide more flexibility such that the scheduler can be tilted from PF to Max C/I.

Hi Ali Khalid. Is there any difference in how vendors (i.e. Ericsson and Huawei) calculate the user throughput? I have 2 similar networks (similar cells, similar SGi throughput, same RRC users), but Ericsson shows lower network user throughput than Huawei. I think the formula is the same:

Huawei: (L.Thrp.bits.DL.QCI6- L.Thrp.bits.DL.LastTTI.QCI6) /L.Thrp.Time.DL.RmvLastTTI.QCI6

Ericsson: (pmPdcpVolDlDrb – pmPdcpVolDlDrbLastTTI) / pmUeThpTimeDl

I don’t know whether “pmUeThpTimeDl” already excludes the time of the last TTI.

Firstly, the Huawei formula you shared is for QCI-6 while the Ericsson formula is for total traffic, so that should be corrected. The pmUeThpTimeDl should also exclude the last TTI.

Thanks, I get it now.

Very Good Ali Bhai…

Thanks Sanjeev

Dear

Thanks for this post.

Could you please give us a brief explanation of SINR PUCCH/PUSCH and RSSI PUCCH/PUSCH?

Until now I haven’t found a correlation between SINR and RSSI. Also, what is the range of values for each indicator, RSSI and SINR? Is SINR proportional to RSSI? And from which value can we say that we have a problem?

Finally, what is the equivalent of UL interference (RTWP in 3G) in LTE?

This information is quite important for us as NPO.

This will be covered in one of the short articles explaining the RSRP, RSRQ, SINR and RSSI.

Thanks a lot. Please include max of channel PUCCH, PUSCH, ..

Briefly explained in a very easy way. It shows your professionalism and experience in the field. We are expecting more such excellent articles in the future.

Thanks Aiman 🙂

Dear,

As always a very good article.

Could you please explain whether the UE reports CQI based on a mapping from SINR, or reports SINR and CQI together?

I ask because I have a counter of SINR reported by the UE (and also CQI) by range.

“– CQI & MCS Mapping:

The next step is the CQI (Channel Quality Indicator). Once the UE measures its SINR, it will convert it to a CQI value so it can report to the eNB”

UE reports CQI based on SINR. Kindly check the counter again, it might be related to uplink SINR as eNB calculates the uplink SINR itself and usually there are counters for that.

Thanks.

Does the eNB calculate SINR in DL and send it to the UE?

No, SINR in DL is calculated by UE and UE sends a CQI based on that SINR to eNB. eNB only calculates SINR in UL. This is for FDD.

Hi Ali, in Nokia there is a calculation of max/average users or RRC connected users. But the value is sometimes higher than what the PDCCH can bear in 1 TTI. Please explain how Nokia counts the users: is it per TTI, or how?

Can you elaborate your question with an example?

For example, I would like to check the average users on an hourly basis. Say the average is 70 users. How is it calculated when our smallest unit is the TTI?

As you said yourself, it is the average of the hour so it will be averaged over all the TTIs in that period or if the granularity is in seconds, it will be second based.

A difficult topic explained very simply. Now it is much easier to understand. Thank you so much for the time spent on knowledge sharing. Expecting more articles in such a simple, easy to understand way ☺☺

Thanks Tarun 🙂

Excellent and jazakALLAH for your efforts …

Waiting for the topic of MLB, as there are many parameters that need to be considered in layer strategies.

Thanks again for valuable addition in knowledge

Thanks Sufyan 🙂

Many thanks for providing such an excellent article. I expect to read more articles in the future.

Thanks for the kind words, Stanley 🙂

Brilliant article. Thank you very much for sharing knowledge. Keep sharing.

You are welcome Shahroz 🙂

Hi,

Thank you for posting this nice article.

I want to know more about BLER.

How does the eNodeB or UE calculate BLER?

How is it determined whether it is 5, 7 or 10%?

Each time the eNB sends DL data, the UE decodes it and responds with HARQ feedback. If it is unable to decode the data, the UE will respond with a HARQ NACK, so when the eNB gets a NACK, it will count it in BLER. Since the eNB knows the number of ACKs and NACKs received from each UE, it can calculate the BLER as NACKs over the sum of ACKs and NACKs.
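As a minimal sketch of that ratio (the function name `harq_bler` and the sample counts are made up for illustration):

```python
def harq_bler(acks, nacks):
    """BLER as the share of NACKs among all HARQ feedback received."""
    total = acks + nacks
    if total == 0:
        return None  # no feedback yet, so BLER is undefined
    return nacks / total


# 90 ACKs and 10 NACKs give a BLER of 10%
print(harq_bler(acks=90, nacks=10))
```

The measured value can then be compared against the configured target (for example 10%) to decide whether the MCS selection should become more or less aggressive.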

Thanks for this post Ali. It was pretty helpful. I have a question related to BLER calculation.

Let’s say you are sending 10 packets as follows:

i r i r i r i r i r (i=initial transmission, r=retransmission)

That means you are sending a retransmission after every initial transmission. When you check each packet, let’s say you get

1 0 1 0 1 0 1 0 1 0 (erroneous packets in initial transmissions but recovered in retransmission)

In this case, what is the BLER, 50% or 0%? I am asking because, in reality, if a packet is recovered in retransmission, the device would throw the first packet away and process the retransmission. That means all of the transmitted messages are recovered in this example. In this situation, I expect the BLER to be 0%. However, I observe 50% BLER. Although all packets are recovered with HARQ, why do we still observe 50% BLER? I think I am missing something here.

(If you can provide a link with document (especially from 3GPP website) in your answer, that would be great.) Thanks in advance

The HARQ BLER should be 50% in your example while the residual BLER (R-BLER) would be 0%. So, if you look at this from the RLC layer, the BLER is 0% as all packets were successfully received. If you look from MAC/PHY, the BLER is 50%.
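The two figures for the "i r i r ..." example can be reproduced with a short sketch. The data layout and the function name `harq_and_residual_bler` are assumptions for illustration; the definitions follow the reply above (HARQ BLER counts every failed attempt, residual BLER counts only blocks never recovered).

```python
def harq_and_residual_bler(attempts):
    """attempts: list of (kind, ok), kind is "i" (initial) or "r" (retransmission),
    ok is True when the transport block passed its CRC check."""
    if not attempts or attempts[0][0] != "i":
        raise ValueError("sequence must start with an initial transmission")
    # HARQ BLER: failed attempts over all attempts (MAC/PHY view)
    failed = sum(1 for _, ok in attempts if not ok)
    harq_bler = failed / len(attempts)
    # Residual BLER: blocks still undecoded after their retransmissions
    blocks = []
    for kind, ok in attempts:
        if kind == "i":
            blocks.append(ok)  # a new block starts with each initial transmission
        else:
            blocks[-1] = blocks[-1] or ok  # a retransmission can recover the block
    residual_bler = blocks.count(False) / len(blocks)
    return harq_bler, residual_bler


# Every initial transmission fails and every retransmission succeeds
attempts = [("i", False), ("r", True)] * 5
print(harq_and_residual_bler(attempts))  # (0.5, 0.0)
```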

Thanks Ali. It is clear in my mind now.

I have one more quick question. In some applications (such as V2X), the BLER threshold is defined as 10%. Does it refer to BLER at MAC/PHY or at the RLC layer? If your answer is MAC/PHY, may I learn why BLER at MAC/PHY is important? I am asking because, if the packets are recovered at the RLC layer, why do we still need to care about BLER at MAC/PHY? This is a bit confusing.

The BLER is usually measured at MAC/PHY, based on transport block CRC checks. You are right that higher layers can recover packets, but a higher BLER means higher delay, as it needs more retransmissions at MAC/PHY and will also result in more RLC level retransmissions. It also increases resource utilization.

I salute you

Simply Brilliant

For me the best article

“For instance, a UE which has a small amount of data accesses the cell and gets its data within two to three TTIs (subframes), then the eNB will not have enough CQI samples to converge quickly. The same UE will try again next time and the eNB will keep using a conservative CQI and MCS for such a UE. So, if the delta for initial CQI value is reduced, such UEs will get a less conservative CQI and MCS resulting in better data rates.”

Just to be clear that I understood correctly:

When the same UE tries again after some time, then again (considering the small amount of data) the eNB won’t have enough samples to converge the CQI towards the initially reported CQI value, and that’s what you meant by the eNB will keep using a conservative CQI & MCS?

And in the last statement, you meant that once the CQI values have matured (delta for initial CQI value), the eNB will converge to the initially reported CQI, meaning an increase in MCS index and hence improved throughput?

Yeah, you understood it correctly.

Thanks Ali for the valuable information.

I would like to know: to increase the MCS index when a UE has a small amount of data, should we increase the Initial Delta CQI? Let’s say it is -4; can we set it to +2 to move more quickly to a higher MCS index?

thanks Ali..for this beautiful explanation..

Thanks Munzir 🙂

I have a request: could you kindly write an article on scheduling, covering every aspect of it?

Clearly explained and a very helpful article.

Thanks Adnan 🙂